Introduction

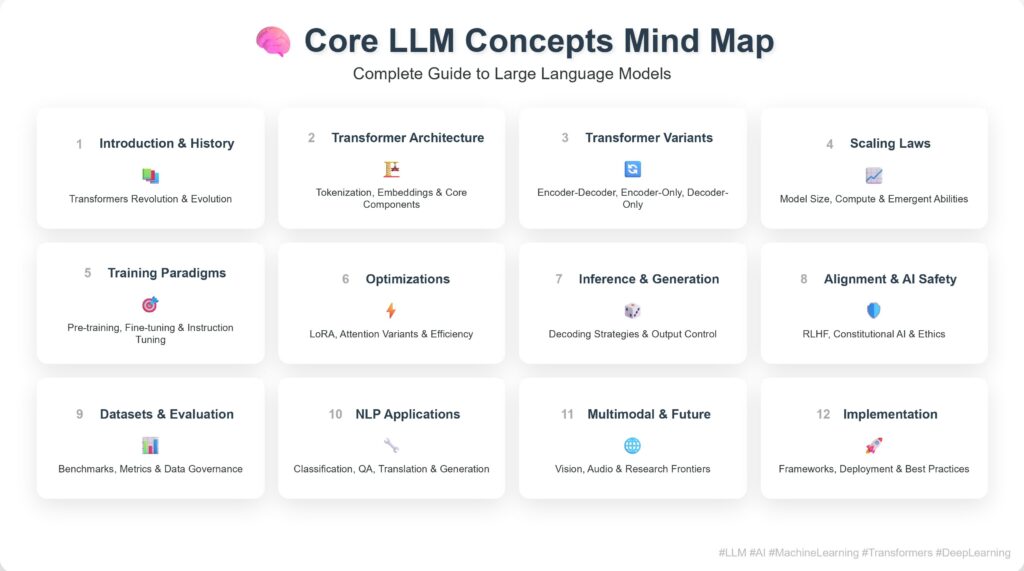

Welcome to my post series on the inner workings of Transformers, with a focus on their application in large language models (LLMs). Over the course of the series, I’ll break down key components, such as self-attention, positional encoding, and layer normalization, and highlight how each contributes to the remarkable capabilities of modern LLMs. Each post will explore one element in depth, grounded in both theoretical understanding and practical relevance. The goal is to make these complex systems more transparent and accessible, whether your interest is research, development, or simple curiosity. Let’s begin this journey into the architecture behind today’s most powerful AI models.