Interacting with AI via prompts—i.e., text instructions—seems simple, but it hides plenty of surprises. In recent years (2023–2025), researchers have intensively analyzed how the form and style of our queries affect the responses of large language models (LLMs). It turns out that some popular beliefs about the “art of asking questions” need revisiting. Below are five surprising, research-backed truths about prompts. These findings give AI conversations a more scientific edge—and might change how you formulate instructions.

Author Archives: Marcin

Taming Long Context in LLMs: 3 Problems, 1 Cohesive Set of Strategies

In practical LLM systems (QA, analytics, assistants, agentic RAG), three phenomena routinely degrade quality: (1) Lost in the Middle: accuracy drops when the key evidence sits in the middle of a long prompt; (2) Distracting prompts: a few “tempting” sentences derail reasoning; (3) Performance drop in very large contexts: despite advertised windows of 32k+ tokens, accuracy and stability degrade. Below, I cover why this happens, what works “right now,” what to implement in the model and the pipeline, and how to measure it rigorously.
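One way to observe Lost in the Middle on your own model is a depth sweep. Keep the question and the distractor passages fixed, and move only the passage that holds the answer. Below is a minimal Python sketch of the prompt-building side, assuming plain-string passages. The function names and the prompt template are hypothetical, and the model call and answer scoring are deliberately left out.

```python
from typing import Dict, List, Sequence


def insert_at_depth(needle: str, distractors: List[str], depth: float) -> List[str]:
    """Return a copy of `distractors` with `needle` inserted at a relative depth.

    depth=0.0 puts the needle first and depth=1.0 puts it last.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be in [0, 1]")
    # round() uses round-half-to-even; the exact index matters little for a sweep.
    idx = round(depth * len(distractors))
    return distractors[:idx] + [needle] + distractors[idx:]


def build_prompt(passages: List[str], question: str) -> str:
    # Hypothetical template: number the passages so a model can cite them,
    # then append the question.
    body = "\n\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return f"{body}\n\nQuestion: {question}\nAnswer:"


def depth_sweep(
    needle: str,
    distractors: List[str],
    question: str,
    depths: Sequence[float] = (0.0, 0.25, 0.5, 0.75, 1.0),
) -> Dict[float, str]:
    # One prompt per depth; only the needle's position changes between prompts.
    return {d: build_prompt(insert_at_depth(needle, distractors, d), question) for d in depths}


if __name__ == "__main__":
    filler = [f"Filler passage number {i}." for i in range(8)]
    prompts = depth_sweep("The vault code is 4172.", filler, "What is the vault code?")
    print(prompts[0.5])
```

The pattern to look for is lower accuracy at depths around 0.5 than at 0.0 and 1.0. This is a single-needle probe; multi-needle variants place several facts at different depths at once.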

TL;DR for the impatient

- Instead of stuffing everything into the prompt: retrieval → cross-encoder reranking → compression → extreme ordering (most important at the beginning and end).

- Limit distraction with a simple instruction plus an answer format, few-shot examples that contain irrelevant “noise,” self-consistency, and passage-level gating/abstention (NO-RESPONSE).

- Stabilise long context via position scaling (LongRoPE/YaRN), a training regime for long sequences (ProLong), test-time adaptation (LIFT), streaming attention with sink tokens and/or external memory.

- Measure smartly and go beyond “needle-in-a-haystack”: use RULER/ONERULER (including multilingual settings), multi-needle tests, and real tasks that require citing sources.
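Two of the cheap steps in this list are easy to sketch: extreme ordering of already-reranked passages, and a self-consistency vote that honors NO-RESPONSE abstentions. This is a minimal Python sketch. It assumes you already have passages sorted best-first (for example, by cross-encoder score) and a list of answers sampled from the model, one per sample or per passage. The function names, the exact `NO-RESPONSE` string check, and the `min_agreement` threshold are illustrative assumptions, not any specific library's API.

```python
from collections import Counter
from typing import List, Sequence, TypeVar

T = TypeVar("T")
NO_RESPONSE = "NO-RESPONSE"  # assumed abstention token the model is told to emit


def extreme_order(ranked: Sequence[T]) -> List[T]:
    """Reorder items sorted best-first so the strongest sit at both edges.

    Even ranks (0, 2, 4, ...) fill the front in order; odd ranks fill the back
    in reverse, so the weakest items end up in the middle of the prompt.
    """
    front: List[T] = []
    back: List[T] = []
    for i, item in enumerate(ranked):
        (front if i % 2 == 0 else back).append(item)
    return front + back[::-1]


def vote_with_abstention(answers: Sequence[str], min_agreement: float = 0.5) -> str:
    """Self-consistency-style majority vote with passage-level gating.

    Answers equal to NO_RESPONSE (e.g. from passages the model judged
    irrelevant) are dropped. If nothing remains, or the winning answer's share
    of the remaining votes is below `min_agreement`, the function abstains.
    """
    kept = [a.strip() for a in answers if a.strip() != NO_RESPONSE]
    if not kept:
        return NO_RESPONSE
    answer, count = Counter(kept).most_common(1)[0]
    return answer if count / len(kept) >= min_agreement else NO_RESPONSE


if __name__ == "__main__":
    print(extreme_order(["p1", "p2", "p3", "p4", "p5"]))
    print(vote_with_abstention(["42", "42", NO_RESPONSE, "41"]))
```

For five passages ranked p1 to p5, `extreme_order` yields p1, p3, p5, p4, p2, so the two strongest passages occupy the edges of the context. In practice you would normalize answers more aggressively (case, punctuation, units) before voting.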

Revolution in the Test Tube: How AI Agents Are Transforming Scientific Research

From Data Analysis to a Partner in Discovery

Artificial intelligence in science is undergoing a profound transformation. For years, it was seen mainly as a tool for analysing large datasets. Today we’re witnessing its evolution from a passive analyst into an active research partner: AI systems can help formulate hypotheses, design experiments, and interpret results (always under human oversight). This trend is well documented in 2025 surveys and reports, including the Stanford AI Index 2025 and the State of AI Report 2025.

5 Surprising Truths About the AI Revolution

We live in a time when technological change is happening faster than ever. This sense of acceleration isn’t just a subjective impression; it’s a measurable reality. As early as 1999, Vint Cerf, one of the fathers of the internet, observed that one year in the internet industry was like seven “dog years.” That comparison, once an apt description of the pace of innovation, now seems insufficient for artificial intelligence. AI is reshaping our world at an unprecedented speed, faster than previous technology waves, including the internet era. The volume of data and analysis on the topic is overwhelming, and media narratives often swing between utopian excitement and dystopian fear. Yet beneath the headlines lie hard numbers that paint a far more nuanced and fascinating picture.

In this article, I present five of the most surprising and counterintuitive findings from the latest analyses. They help explain the true nature of the AI revolution—its unprecedented speed, paradoxical economics, geopolitical tensions, impact on the physical world, and the fundamental shift in the labor market. These are truths worth knowing to navigate the era ahead with intent.

The Anatomy of a Scientific Review: Surviving the Ultimate Judgment in Computer Science and Medical Sciences

You hit “Submit” and then… silence. Months of work, hundreds of edits, one click—and the long wait for a verdict from those enigmatic figures: Reviewer 1, Reviewer 2… It’s one of the most stressful moments in a researcher’s life. But what actually happens on the other side? Peer review is the backbone of science—a quality-control system meant to ensure that published work is important, original, and rigorous (Sense about Science).

A Researcher’s Roadmap: A Practical Framework for Rigorous Science

After many years in research, I find that the scientific process, from idea to publication, has become second nature. This intuition, though invaluable, deserves to be structured. I want to describe this workflow not only to better understand my own work but also to create a map that can help others navigate this complex terrain.

One inspiration was a humorous but accurate list from the book “We Have No Idea: A Guide to the Unknown Universe” by Jorge Cham and Daniel Whiteson:

- Organize what you know

- Look for patterns

- Ask questions

- Buy a tweed jacket with elbow patches

However, scientific work is, above all, the art of asking the right questions. It’s not about “beating the baseline” but about understanding a phenomenon. The question “why?” is a researcher’s compass. In turn, understanding often means the ability to reconstruct a mechanism (e.g., by implementing code or a formal proof), although in some areas of mathematics, a complete, verifiable line of reasoning is sufficient.

I have noticed that whether I am writing an empirical paper in Natural Language Processing (NLP) or a systematic review with a meta-analysis, a common skeleton lies beneath the surface. The result of these observations is the working framework below, which attempts to visualize this skeleton.

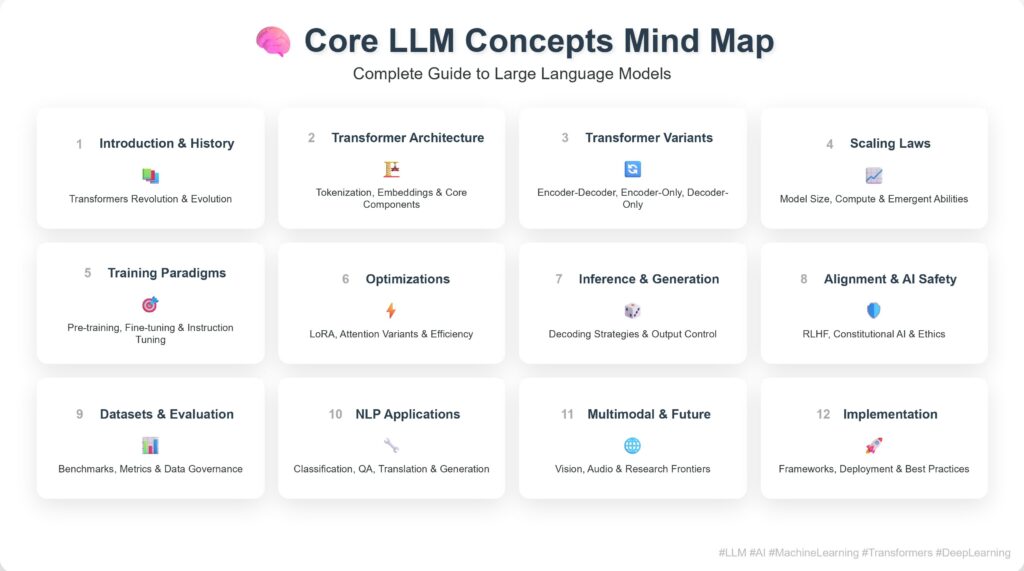

Transformer – Roadmap

Introduction

Welcome to my series of posts on the inner workings of Transformers, with a focus on their application in large language models (LLMs). Over time, I’ll break down key components, such as self-attention, positional encoding, and layer normalization, highlighting how each contributes to the remarkable capabilities of modern LLMs. Each part of the series will explore one element in depth, grounded in both theoretical understanding and practical relevance. The goal is to make these complex systems more transparent and accessible, especially for readers interested in research or development, or simply curious. Let’s begin this journey into the architecture behind today’s most powerful AI models.

Unveiling Dual Quality in Product Reviews: An NLP-Based Approach

Abstract

Consumers often face inconsistent product quality, particularly when identical products vary between markets, a situation known as the dual quality problem. To identify and address this issue, automated techniques are needed. This paper explores how natural language processing (NLP) can aid in detecting such discrepancies and presents the full process of developing a solution. First, we describe in detail the creation of a new Polish-language dataset of 1,957 reviews, 540 of which highlight dual quality issues. We then discuss experiments with various approaches, such as SetFit with sentence-transformers, transformer-based encoders, and LLMs, including error analysis and robustness verification. Additionally, we evaluate multilingual transfer using a subset of opinions in English, French, and German. The paper concludes with insights on deployment and practical applications.

Reflections on Document Classification Research: Insights from a Systematic Review

Recently, I published an article titled “The Outcomes and Publication Standards of Research Descriptions in Document Classification: A Systematic Review.” The study analyzed over 100 research papers to identify trends, challenges, and gaps in document classification research.

Due to space limitations, many interesting observations and insights did not make it into the final publication. Instead, they were documented in the accompanying technical report available on GitHub. In this post, I want to highlight some of these additional insights and discuss what they mean for future research in document classification.

The Outcomes and Publication Standards of Research Descriptions in Document Classification: A Systematic Review

Abstract

Document classification, a critical area of research, employs machine and deep learning methods to solve real-world problems. This study aims to highlight the qualitative and quantitative outcomes of a literature review spanning a broad range of approaches, including machine and deep learning methods as well as nature-inspired, biologically inspired, and quantum-physics-inspired methods. A rigorous synthesis was conducted using a systematic literature review of 102 papers published between 2003 and 2023. The 20 Newsgroups dataset (bydate version) served as the reference benchmark to ensure fair comparisons of methods. Qualitative analysis revealed that recent studies combine Graph Neural Networks (GNNs) with transformer-based models and propose end-to-end solutions. Quantitative analysis identified state-of-the-art results, with accuracy, micro-F1, and macro-F1 scores of 90.38%, 88.28%, and 89.38%, respectively. However, the reproducibility of many studies may need improvement. The resulting overview covers a wide range of document classification methods and can contribute to a better understanding of this field. Additionally, the systematic review approach reduces systematic error, making it useful for researchers in the document classification community.